Quantifying Server Client Sync with Selected RigidBodies in Server’s Physics Step (Part 2)

In the last post, I described a method to report on prediction errors between server and client for a fast-paced multiplayer game. In this article, I will explain why my conclusion there was wrong and support that with evidence.

There are a few good online resources that provide concrete code examples of lag compensation in multiplayer games. However, very few that I’ve found handle more than one client in the example. This series of articles attempts to do just that, showing how the techniques were improved upon and why, using quantified evidence.

An authoritative server in a multiplayer game collects input messages from clients in a list, then periodically runs through the list, processes the players’ inputs, advances the simulation, and sends the simulation state to the clients.
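As a rough illustration of that loop, here is a minimal engine-agnostic sketch in Python rather than Unity code. The names `InputMessage`, `Server`, `receive`, and `tick` are hypothetical, the world is one-dimensional, and each input simply sets the player's forward speed. It shows the simplest variant, where the physics is stepped once after all pending inputs are applied.

```python
from dataclasses import dataclass, field


@dataclass
class InputMessage:
    player_id: int
    sequence: int   # client-side input counter (hypothetical field)
    move: float     # requested forward speed for this input


@dataclass
class Server:
    dt: float = 0.02                                  # assumed fixed step, in seconds
    positions: dict = field(default_factory=dict)     # player_id -> position
    velocities: dict = field(default_factory=dict)    # player_id -> velocity
    inbox: list = field(default_factory=list)         # inputs waiting to be processed

    def receive(self, msg: InputMessage) -> None:
        """Queue an input message as it arrives from a client."""
        self.inbox.append(msg)

    def step_physics(self) -> None:
        """Advance every player by one fixed step."""
        for pid, velocity in self.velocities.items():
            self.positions[pid] = self.positions.get(pid, 0.0) + velocity * self.dt

    def tick(self) -> dict:
        """Process all pending inputs, step once, and return a state snapshot."""
        pending, self.inbox = self.inbox, []
        for msg in pending:
            self.velocities[msg.player_id] = msg.move
        self.step_physics()
        return dict(self.positions)   # what would be sent to the clients
```

Calling `receive` for each incoming message and `tick` on a timer reproduces the collect, process, advance, send cycle described above.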

After rigging up the code with more than a dozen Boolean switches to turn on and off various pieces of lag compensation for testing, I incorrectly concluded the server should process all player inputs and then run one physics step.

Why was this wrong? After thinking more and going back to try one other idea, which I’ll get to below, I realized the code was too complex and contained bugs, including redundant physics steps on the server. Unfortunately, those were outside the scope of the basic code snippets I had tasked myself with posting.

So, after satisfying myself that the bugs were fixed, I re-ran the analysis from the first post using the same quantification and reporting code. It wasn’t quick or simple to build, and had its own bugs, but its value is clear in providing significant insight into what’s going on between server and client.

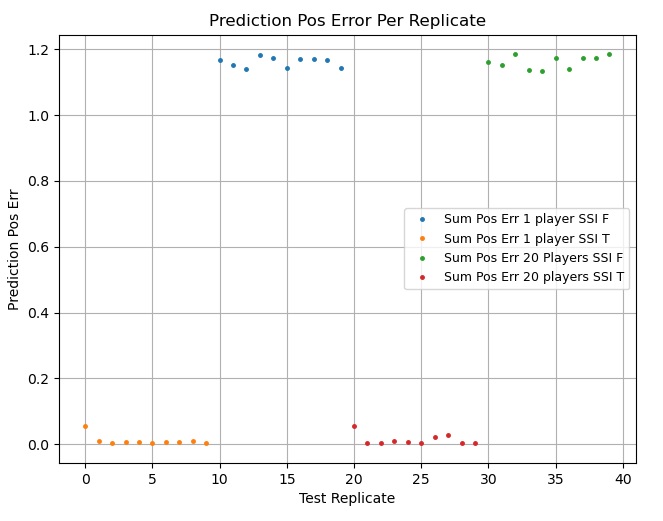

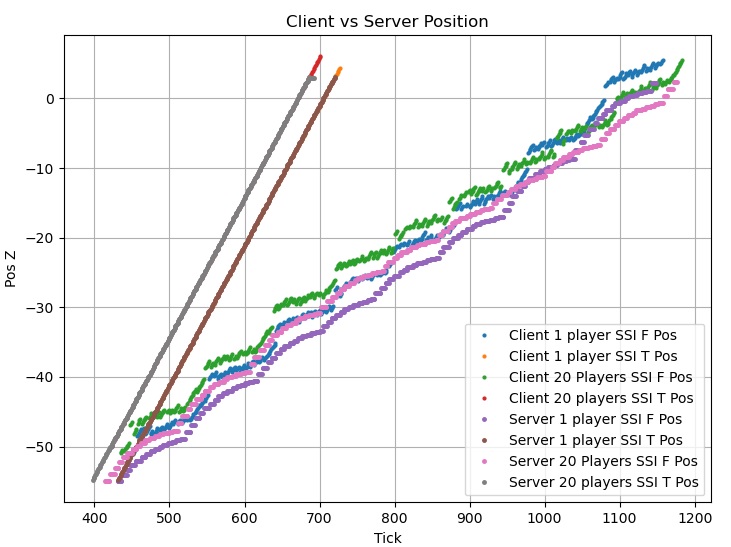

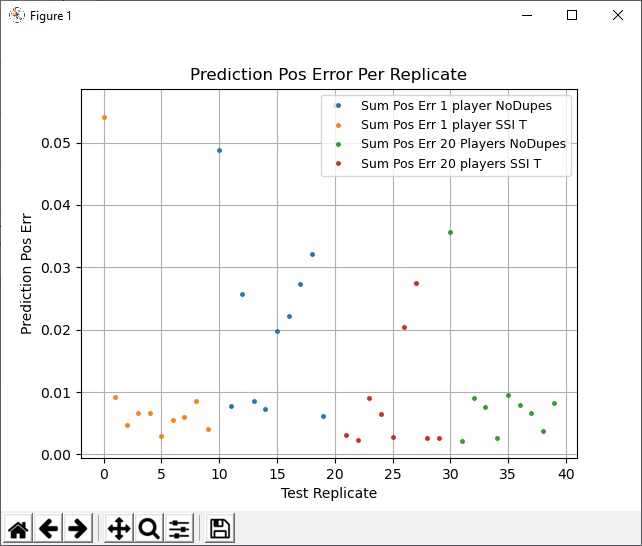

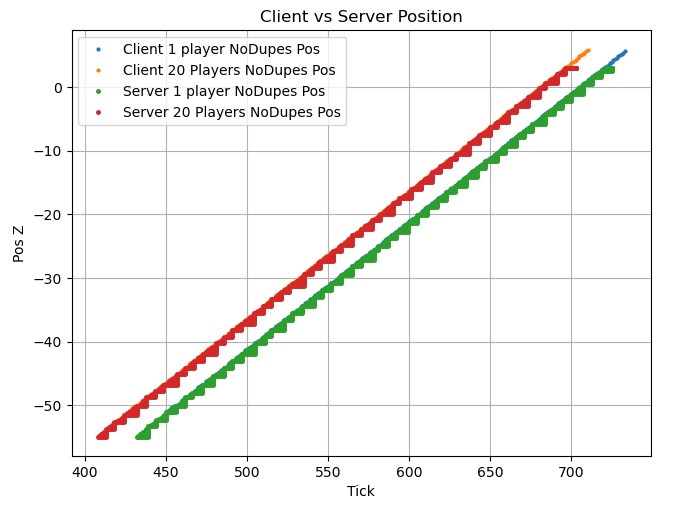

The graph on the left is the normalized position error for each test run of the player moving straight ahead for a fixed distance. The graph on the right is the position along this straight test line of both server and client for each case.

Now with previously undetected bugs fixed, there is a clear difference between the normalized prediction errors with one versus twenty players. And the normalized error is about the same, which is interesting. Additionally, the player movement position curves are all over the place when the server steps outside the inputs loop.

The goal was to keep the game mechanics as consistent as possible between one and twenty players, and it is now clear that stepping outside the input loop is bad. In hindsight, that is what one would expect: when two inputs from the same player can be applied before the physics is stepped, the position jumps and diverges from the straight mechanics line produced by stepping inside the loop.
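The difference can be reproduced with a toy one-dimensional model. This is a sketch under stated assumptions, not the code behind the graphs: each input adds a fixed amount to the velocity, the velocity is consumed by the next physics step, and `run_server`, `step_sizes`, `SPEED`, and `DT` are hypothetical names.

```python
SPEED = 5.0   # velocity contributed by one input (assumed)
DT = 0.02     # fixed physics step in seconds (assumed)


def run_server(inputs_per_tick, step_inside):
    """Return the position after every physics step.

    inputs_per_tick lists how many inputs from one player arrived
    before each server tick, e.g. [1, 2, 0, 1, 2].
    """
    position = 0.0
    trace = []
    for count in inputs_per_tick:
        if step_inside:
            # One physics step per input: every step moves the same distance.
            for _ in range(count):
                position += SPEED * DT
                trace.append(position)
        else:
            # All inputs pile up, then a single step is taken for the tick.
            velocity = SPEED * count
            position += velocity * DT
            trace.append(position)
    return trace


def step_sizes(trace):
    """Distance covered in each physics step, rounded for readability."""
    sizes, previous = [], 0.0
    for position in trace:
        sizes.append(round(position - previous, 6))
        previous = position
    return sizes
```

With `inputs_per_tick = [1, 2, 0, 1, 2]`, stepping inside gives six equal steps, while stepping outside gives steps of uneven size, including a double-length jump whenever two inputs arrive in the same tick.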

So we really want to step inside the loop, but we need to ensure that the player being updated and their weapon projectiles are the only things moving in the scene during a physics step.

Unity by itself, even utilizing physics scenes, has no real means of isolating one rigid body during a physics step. You can make separate scenes for each player, but the overhead has been noted to bog things down significantly (references in previous post).

What’s left then is to isolate one player by preventing all other players from moving.

Players are “locked” after joining the server and moving to their spawn position. Locking entails caching the current velocity and then setting it to zero. In this way, all players except the one “unlocked” during their input simulation step remain in the same place, which preserves the mechanics of the game.
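The locking idea can be sketched in a few lines. This is an illustrative Python model, not Unity code: `Body`, `lock`, `unlock`, `step_all`, and `simulate_input` are hypothetical names, and restoring the cached velocity on unlock is my assumption about what the cache is for. A real rigid body would also carry angular velocity and other state that this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class Body:
    position: float = 0.0
    velocity: float = 0.0
    cached_velocity: float = 0.0
    locked: bool = False

    def lock(self) -> None:
        """Cache the current velocity, then zero it so the body stays put."""
        if not self.locked:
            self.cached_velocity = self.velocity
            self.velocity = 0.0
            self.locked = True

    def unlock(self) -> None:
        """Restore the cached velocity (assumed behavior)."""
        if self.locked:
            self.velocity = self.cached_velocity
            self.locked = False


def step_all(bodies, dt):
    """One physics step over the whole scene; locked bodies have zero velocity."""
    for body in bodies:
        body.position += body.velocity * dt


def simulate_input(bodies, active_index, new_velocity, dt):
    """Unlock one player, apply their input, step the scene, lock them again."""
    active = bodies[active_index]
    active.unlock()
    active.velocity = new_velocity
    step_all(bodies, dt)
    active.lock()
```

Because every other body has zero velocity during the step, the whole scene can be stepped while only the active player actually moves.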

After fixing bugs, I wanted to double check that the locking is still performing as intended.

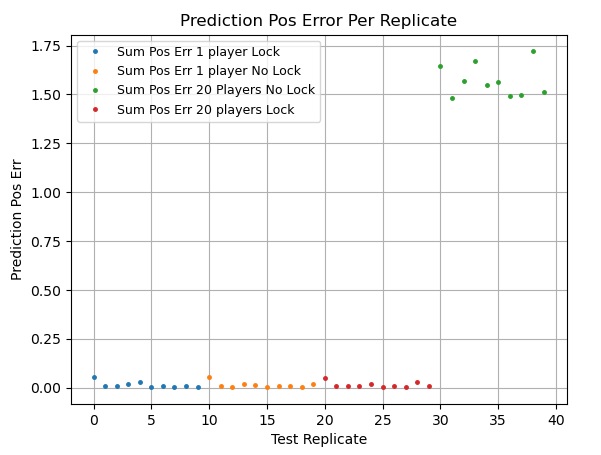

The left graph of prediction error shows that locking with twenty players has a huge effect, bringing the error down to that of one player. The behavior can be seen in more detail in the right-hand graph, where the pink and green curves (server and client) for the unlocked twenty-player case diverge greatly from the straight mechanics line and from each other.

The locked cases of one and twenty players remain straight in their mechanics, although they differ slightly from each other. Contrary to my conclusion in the previous post, the best case is having the server step inside the client input loop, once for each player input.

Interestingly, the case of one player not locked on the right remains somewhat straight, but the slope begins where the “one player locked case” does and ends where the “twenty player locked case” ends.

Here’s a video comparing a player’s server and client projectiles with nineteen other players and locking vs no locking.

The top clearly shows the server outpaces the client quickly, since the projectile is not locked between server physics steps of all the other players’ inputs. The bottom shows how locking the projectile prevents this problem and (for now) the server follows behind the client as a function of latency.

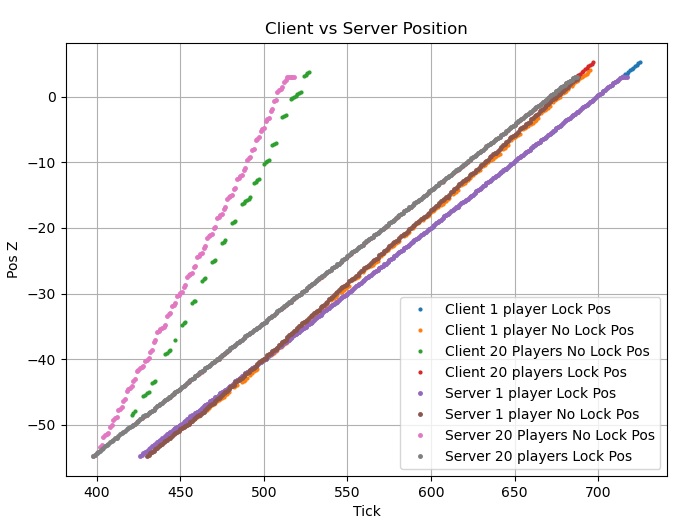

There remains one logical case I hadn’t thought of before. Instead of simply stepping outside or inside the input loop, the server could in theory step once for each round of unique player inputs. That is, process only the first pending message from each player, then step, then go back and repeat until all messages are processed. Although this means more stepping on the server side, I figured it was worth investigating. Here’s the resulting graph.
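One way to picture this de-duping scheme is to split the pending messages into rounds, each holding at most one message per player, with one physics step per round. The following is a hedged Python sketch; `unique_input_rounds` and the `(player_id, payload)` message shape are hypothetical.

```python
from collections import deque


def unique_input_rounds(messages):
    """Group messages into rounds with at most one message per player.

    messages is a list of (player_id, payload) tuples in arrival order.
    The server would apply one round, step the physics, then move on
    to the next round until nothing is left.
    """
    queues = {}   # player_id -> that player's pending payloads, oldest first
    for player_id, payload in messages:
        queues.setdefault(player_id, deque()).append(payload)

    rounds = []
    while queues:
        current = []
        for player_id in list(queues):
            current.append((player_id, queues[player_id].popleft()))
            if not queues[player_id]:
                del queues[player_id]   # this player has no more messages
        rounds.append(current)
    return rounds
```

For five messages where player 1 sent three and players 2 and 3 sent one each, this yields three rounds of sizes 3, 1, and 1, so the number of physics steps per batch depends on whichever player has the most queued messages.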

Right away I see the errors appear less consistent and more scattered than in the simple lock vs no lock case. Having the server repeatedly loop over the inputs, processing one message per player and stepping until none are left, introduces additional variation. The position curves on the right are interesting: although they generally follow the control case of stepping inside the loop, they have lobes of processing time. Here’s a close-up:

Well, that would be why: the server has an inconsistent number of messages in each de-duping loop, which results in varying delays between calls. Meanwhile, clients are still predicting ahead, introducing more variation.

New conclusion? The server will process each input message and step the scene separately, only allowing that player and their projectiles to move.