Quantifying Server Client Sync with Selected RigidBodies in Server's Physics Step (Part 1)

While building a relatively fast-paced multiplayer game, I came across what I think are the common, great references on lag compensation, like this, this, this, and this. I often saw phrases like "but try different techniques to see which is best". What I could not find were suggestions on how to quantify which one works best for a particular game. In this post I will attempt to describe such a reporting system, and how I'm using it to try to optimize the game for both multiplayer and single player.

Quick background: the game uses an authoritative server/client model, built in Unity 2019.2.17f and initially targeting Steam via the Facepunch.Steamworks API. Network messaging uses the safe version of this bit compression library. (I'd love to use the unsafe version for speed, but there is a reported bug.) Auto simulation is disabled, and I use Physics.Simulate with two physics scenes, one for the client and one for the server (in single player mode).

Multi-player and single-player modes use the same code, and I hijack the single player mode to perform this optimization testing using artificial lag which will go away in production. The client and server each have a reference to each other in the scene, so at message send time they can just call the other's OnP2PData method directly.

//Language: C#
// send the message
if (!m_bIsSinglePlayer)
{
    m_fpClient.Networking.SendP2PPacket(m_steamIDGameServer, bytes, bytes.Length);
}
else
{
    if (ARTIFICIAL_LAG_SEC > 0)
    {
        m_uiListSPOutgoingMsgIdxs.Add(m_players[m_iPlayerIndex].m_stMyClient2ServerPlayerUpdate.m_uiMessageIdx);
        IEnumerator aCoRo = SendP2PSinglePlayerClient2ServUpdateWithLag(0, bytes,
            m_players[m_iPlayerIndex].m_stMyClient2ServerPlayerUpdate.m_uiMessageIdx);
        StartCoroutine(aCoRo);
    }
    else
    {
        m_SinglePlayerServer.OnP2PData(0, bytes, bytes.Length, 0);
    }
} // if ! single player

To avoid a race condition between two coroutines finishing at the same time (which I found through testing), I also keep a list of outgoing message indices and only deliver a message when its time is up and it is next in line, like this:

//Language: C#
// For characterization testing only, hijack single player with artificial lag
IEnumerator SendP2PSinglePlayerClient2ServUpdateWithLag(ulong _asteamid, byte[] buffer, uint _msgidx)
{
    float StartTime = Time.time;
    // wait until the lag has elapsed AND this message is first in the outgoing list
    while (Time.time < StartTime + ARTIFICIAL_LAG_SEC || m_uiListSPOutgoingMsgIdxs[0] != _msgidx)
        yield return null;
    m_SinglePlayerServer.OnP2PData(_asteamid, buffer, buffer.Length, 0);
    m_uiListSPOutgoingMsgIdxs.RemoveAt(0);
    yield return null;
}
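The ordering rule above (deliver only when the delay has elapsed and the message is first in line) can be sketched outside Unity as a small queue. This is a minimal illustration, not the game's code: `LaggedChannel`, `send`, and `poll` are hypothetical names, and times are integer milliseconds to keep the comparison exact.

```python
from collections import deque

ARTIFICIAL_LAG_MS = 100  # assumed: the post's 0.1 s lag, expressed in milliseconds


class LaggedChannel:
    """Holds messages for a fixed delay and releases them strictly in send order."""

    def __init__(self, lag_ms):
        self.lag_ms = lag_ms
        self.pending = deque()  # (send_time_ms, msg_idx, payload), oldest first

    def send(self, now_ms, msg_idx, payload):
        self.pending.append((now_ms, msg_idx, payload))

    def poll(self, now_ms):
        """Return every message whose delay has elapsed, oldest first."""
        delivered = []
        # only the head of the queue is ever inspected, so order is preserved
        while self.pending and now_ms >= self.pending[0][0] + self.lag_ms:
            _, msg_idx, payload = self.pending.popleft()
            delivered.append((msg_idx, payload))
        return delivered


channel = LaggedChannel(ARTIFICIAL_LAG_MS)
channel.send(0, 1, b"input-1")
channel.send(20, 2, b"input-2")
early = channel.poll(50)    # nothing is old enough yet
first = channel.poll(100)   # message 1 has waited the full delay
second = channel.poll(120)  # message 2 follows, still in order
```

Because every message shares the same delay, checking only the head of the queue is enough to guarantee that messages arrive in the order they were sent.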

So now I can run in single player mode, using all of the messaging, client side prediction, and server side reconciliation for testing without having to host a server and client separately each time and then connect. I'm sure there will be differences, especially when I go to actually host on the steam cloud, but I figure it's the best way to test things out in full multiplayer mode as one person with minimal effort.

The game for single player also has bots, which I hijack to simulate the load on the server of other players, which was kind of where this really useful reference ended for me, with this bit:

"I'll also point out that this example only has one client which simplifies things, if you had multiple clients then you'd need to either a) make sure you only had one player cube enabled on the server when calling Physics.Simulate() or b) if the server has received input for multiple cubes, simulate them all together."

I went with option B here, but there is a big gotcha. From my perspective, you should only simulate clients who have input messages pending when the server does its physics step. Additionally, all inputs should be applied BEFORE a single server physics step is run. I'll explain why I think so, but first a quick aside on how I collect the data to base that decision on.

This reference caught my eye with its graph of server and client positions, used to report on how well their technique worked. My own reporting basically consists of rigging the game code with StreamWriter Write and WriteLine statements, toggled by static Boolean switches that are easily referenced from any class, so I can set a reporting flag and recompile.

As things can vary a bit, I wanted to have a standardized and repeatable test to collect data on prediction and lag performance. The test consists of the player moving from a defined transform to a collider. The input message is hijacked and the "forward" flag is set every frame, so he keeps going until he hits. The scene looks like this:

First I wanted to collect and sum up prediction and lag error for one forward walk from start to finish and produce a single number (scalar), then run it 10 times and see the spread.

To make life easier, I define a characterization test class and a list of tests, then run through them automatically, teleporting the player from the end back to the starting transform after each run. For some tests I need to stop and restart the game in the editor, because (for now) bots can only be created when the server is first launched.

//Language: C#
public class CharacterizationTest
{
    public string m_sName;
    public bool m_bPauseAtEnd;

    public CharacterizationTest(string _name, bool _pause)
    {
        m_sName = _name;
        m_bPauseAtEnd = _pause;
    }
}

To start I wanted to compare error between just one player and 20, the max in the game. I defined two tests:

//Language: C#
public static CharacterizationTest[] CHARACTERIZATION_TESTS = new CharacterizationTest[]
{
    new CharacterizationTest("0 bots", true),
    new CharacterizationTest("19 bots", false)
};

A static method sets the various flags for each test condition; here I compare 1 player to 20 (me + 19 bots):

//Language: C#
// set our various flags to a given test condition
public static void SET_TEST_CONDITIONS(int _TestNo)
{
    switch (_TestNo)
    {
        case 0:
            ARTIFICIAL_LAG_SEC = 0.1f;
            SERVER_NUM_BOTS = 0;
            Characterization.TEST_FORWARD_RUN = false;
            Characterization.TEST_FORWARD = true;
            Characterization.TEST_ROTATE = false;
            break;
        case 1:
            SET_TEST_CONDITIONS(0);
            SERVER_NUM_BOTS = 19;
            break;
    }
}

To capture prediction error, I hijack the error computed between the server and client positions for the same message index when the client receives a server update, like this:

//Language: C#
// For characterizing testing with no client side corrections
// we gotta grab the server update position and compare to current to get pos error
Server2ClientPlayerUpdate_t serverPlayerUpdateMsg = new Server2ClientPlayerUpdate_t();

// extract it
serverPlayerUpdateMsg.FromByteArray(m_Server2ClientUpdate.m_baServer2ClientUpdates,
    m_Server2ClientUpdate.GetPlayerUpdateByteArrayOffset(m_iPlayerIndex));

// latest server state
Vector3 lastProcServerPos = serverPlayerUpdateMsg.m_v3Position;
Vector2 lastProcServerRot = serverPlayerUpdateMsg.m_v2EulerAngles;

// client prediction input and state history at the same message index as the server last processed
Vector3 lastProcClientPos = m_InputHistoryAndState[idx].m_Server2ClientPlayerMsg.m_v3Position;
Vector2 lastProcClientRot = m_InputHistoryAndState[idx].m_Server2ClientPlayerMsg.m_v2EulerAngles;

// errors - difference between server and client local state - use later for Client Smoothing
m_v3PositionError = lastProcServerPos - lastProcClientPos;
m_v2RotationError = lastProcServerRot - lastProcClientRot;
m_fPositionError = m_v3PositionError.magnitude;
m_fTotIntegratedPosError += m_fPositionError;
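The metric itself is simple enough to restate in a few lines: for each server update, take the distance between the server position and the client's stored prediction at the same message index, then sum. The sketch below is an illustration under assumptions, not the game's code; `integrated_position_error` is a hypothetical helper and the positions are placeholder values.

```python
import math


def integrated_position_error(server_positions, client_positions):
    """Sum |server - client| over matching message indices.

    Both arguments map message index -> (x, y, z).
    Returns (total error, error per compared update).
    """
    total = 0.0
    count = 0
    for msg_idx, server_pos in server_positions.items():
        client_pos = client_positions.get(msg_idx)
        if client_pos is None:
            continue  # no stored prediction for this index, nothing to compare
        total += math.dist(server_pos, client_pos)
        count += 1
    per_update = total / count if count else 0.0
    return total, per_update


# Placeholder positions for illustration only
server = {1: (0.0, 0.0, 1.0), 2: (0.0, 0.0, 2.0)}
client = {1: (0.0, 0.0, 1.0), 2: (0.0, 0.0, 1.5), 3: (0.0, 0.0, 2.0)}
total_err, err_per_update = integrated_position_error(server, client)
```

Dividing by the number of compared updates is what makes runs with different server update counts comparable, which matters once the server starts dropping updates under load.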

Then at the end of each test, I dump fully normalized test settings and results, like this:

//Language: C#
public static void DUMP_SCALAR_REPORT()
{
    // reporting flag (must be named or qualified differently from this method to compile)
    if (DUMP_SCALAR_REPORT)
    {
        // Scalar characterization test output (opened in append mode)
        StreamWriter sw = new StreamWriter("Characterization.txt", true);

        // write the settings
        DumpTestSettings("", sw, false);

        // write the output values - correction count and tot pos error
        sw.WriteLine(m_iCorrectionCount + // future threshold based correction
            "," + m_fTotIntegratedPosError + // total integrated prediction position error
            "," + m_fTotIntegratedPosError / CHARACTERIZE_TEST_CLIENT_SERVUPDATE_COUNT + // prediction position error per server update
            "," + m_iTestNoInputStepCount + // # of server steps without player input
            "," + m_fTotIntegratedRotError + // total integrated prediction rotation error
            "," + m_fTotIntegratedRotError / CHARACTERIZE_TEST_CLIENT_SERVUPDATE_COUNT + // prediction rotation error per server update
            "," + m_fTotIntegratedLagPosError + // total integrated lag error
            "," + m_fTotIntegratedLagPosError / CHARACTERIZE_TEST_CLIENT_SERVUPDATE_COUNT // lag error per server update
        );

        // close the file
        sw.Close();
    } // dump scalar report
}

public static void DumpTestSettings(string _prefix, StreamWriter _sw, bool _includeHeaders)
{
    // write our conditions as columns, ending with a separator so the
    // result values written by the caller continue the same CSV row
    _sw.Write(_prefix + CHARACTERIZATION_TESTS[CURRENT_CONDITION_TEST].m_sName + "," +
        AVERAGE_FPS_ACHIEVED + "," +
        CHARACTERIZE_TEST_CLIENT_SERVUPDATE_COUNT + "," +
        SERVER_NUM_BOTS + "," +
        ARTIFICIAL_LAG_SEC + ","
    );
}

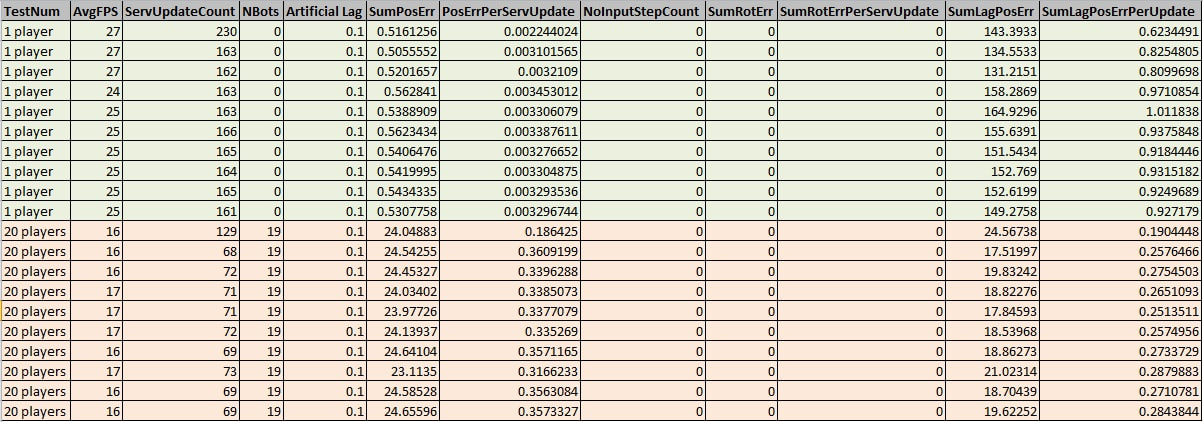

When each test is run 10 times, it produces a CSV file that looks like this:

To plot the results I used Python's matplotlib and colored the markers by test. Here is the file reading and plotting code (formatted as C#):

#Language: Python
import pandas as pd
import matplotlib.pyplot as plt

legendSize = 6

# create subplots
fig, (ax1, ax2) = plt.subplots(1, 2)

#===========================================
# read the scalar files
sclr = pd.read_csv('Characterization_players1vs20.csv')
sclrGrps = sclr.groupby('TestNum')

# plot the results, one marker color per test
for name, group in sclrGrps:
    ax1.plot(group.PosErrPerServUpdate, marker='.', linestyle='', ms=7, label='Pos Err ' + str(name))

# plot the # of server updates on a 2nd y axis
ax1_y2 = ax1.twinx()
ax1_y2.plot(sclr.ServUpdateCount, marker='', linestyle='dashed', label='Server Update Count')

# titles, labels, grid lines, legend
ax1.set_title('Prediction Pos Err / Server Update')
ax1.set_xlabel('Test Replicate')
ax1.set_ylabel('Prediction Pos Err / Server Update')
ax1.grid()
ax1.legend(prop={'size': legendSize})

# show the graphs
plt.show()

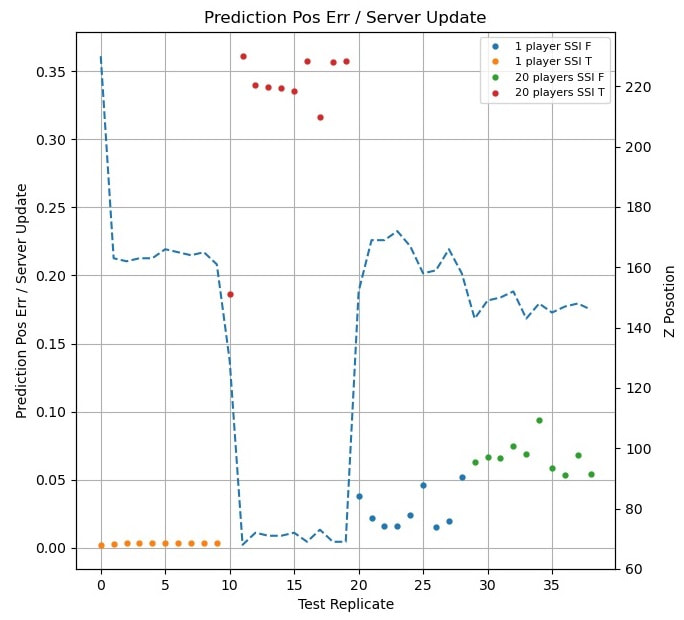

And here is the resulting graph:

This clearly shows that with just one player, the error is very low and consistent, driven primarily by the reduced precision of the values sent in messages. (As a separate check, I ran it again sending full precision, and the error went to zero.) But when 19 more players are added, the error shoots up and the server gets bogged down, as shown by the dashed line, which is the number of server updates in each test. Even though the number of updates drops by half, the error per update is still far higher.
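To put numbers on "low and consistent" versus "shoots up", the per-test mean and spread can be computed straight from the scalar CSV. The sketch below uses only the standard library. The column names `TestNum` and `PosErrPerServUpdate` are taken from the plotting code, while `summarize` is a hypothetical helper and the sample rows are placeholder numbers, not measurements from the game.

```python
import csv
import io
import statistics
from collections import defaultdict


def summarize(csv_text, group_col="TestNum", value_col="PosErrPerServUpdate"):
    """Return {test name: (mean, sample standard deviation)} for one CSV column."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups[row[group_col]].append(float(row[value_col]))
    return {
        name: (statistics.mean(vals),
               statistics.stdev(vals) if len(vals) > 1 else 0.0)
        for name, vals in groups.items()
    }


# Placeholder numbers for illustration only, not results from the post
sample = """TestNum,PosErrPerServUpdate
0 bots,1.0
0 bots,3.0
19 bots,10.0
19 bots,14.0
"""
summary = summarize(sample)
for name, (mean, spread) in summary.items():
    print(f"{name}: mean={mean:.3f} stdev={spread:.3f}")
```

For a real run, the file contents would be read from disk and passed in as `csv_text`, assuming the file carries a header row with those column names.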

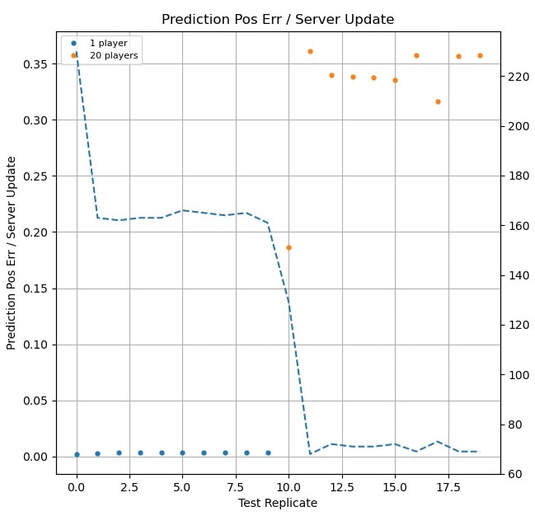

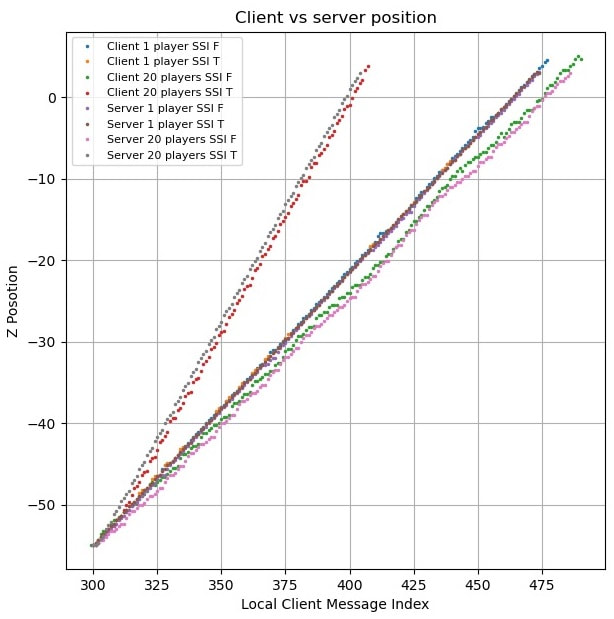

I wanted to see more detail on these two tests, so I rigged up additional StreamWriter statements to have the client and server write the current message index, player position, and errors per message (client tick) to a CSV file. I won't go into code details, but the logic is similar to the scalar file shown above, except it's a time series. Here is the graph of the player's z-position on the client vs the server for both 1 and 20 players:

The server outpacing the client can be seen clearly by comparing its slope above to that of the 1 player case. The goal is to make the mechanics of the game as independent as possible of the number of players, i.e., the single player slope is "ground truth". My reasoning for why the server outpaces the client is that the server update loop runs a physics step *inside* the client input loop, so with additional players the server performs more physics steps than the client does.
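The step-count argument can be checked with a toy one-dimensional model that has no Unity in it. This is a deliberately simplified sketch: `DT` and `SPEED` are assumed values, every player is assumed to deliver exactly one input per tick, and server load and message timing are ignored entirely.

```python
DT = 0.02    # assumed fixed timestep, in seconds
SPEED = 5.0  # assumed forward speed, in units per second


def server_z_after(ticks, players, step_inside_loop):
    """Z position of player 0 on a toy server after `ticks` fixed updates."""
    z = [0.0] * players
    vel = [0.0] * players
    for _ in range(ticks):
        for p in range(players):  # one pending input message per player
            vel[p] = SPEED        # apply the "forward" input
            if step_inside_loop:
                # a physics step here advances EVERY body, once per message
                for q in range(players):
                    z[q] += vel[q] * DT
        if not step_inside_loop:
            # a single physics step per tick, after all inputs are applied
            for q in range(players):
                z[q] += vel[q] * DT
    return z[0]


one_player_z = server_z_after(50, 1, True)        # "ground truth": one step per tick
inside_loop_z = server_z_after(50, 20, True)      # 20 steps per tick
outside_loop_z = server_z_after(50, 20, False)    # one step per tick again
print(one_player_z, inside_loop_z, outside_loop_z)
```

In this model the inside-the-loop server moves player 0 roughly 20 times as far as the single player case, while stepping once after all inputs brings it back to the same distance. The real game will not scale this cleanly, but the direction of the effect is the same.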

Here is code that is called once per FixedUpdate on the server to process collected client input messages, showing the physics step inside the input loop:

//Language: C#
public void ProcessPlayerUpdates()
{
    // if we have players and input messages, process them
    if (m_iPlayerCount > 0 && m_ClientUpdateMessages.Count > 0)
    {
        // loop through our pending messages and update the players based on each
        int t_currMessageLen = m_ClientUpdateMessages.Count;

        // ------------------------------
        // Player input message loop
        for (int idx = 0; idx < t_currMessageLen; idx++)
        {
            // Retrieve the client player to server update embedded in the client to server update
            Client2ServerPlayerUpdate_t clientUpdateMsg = new Client2ServerPlayerUpdate_t();
            clientUpdateMsg.FromByteArray(m_ClientUpdateMessages[idx].m_bClientUpdateMsg);

            // make sure it's a valid player slot
            if (m_PlayerSlotsFilled[clientUpdateMsg.m_iPlayerArrayIdx])
            {
                // call the player update from message
                m_Players[clientUpdateMsg.m_iPlayerArrayIdx].UpdatePlayerFromClient2ServerUpdate(clientUpdateMsg, m_eGameState);

                // Update our most recent message index processed
                if (clientUpdateMsg.m_uiMessageIdx > m_accdClientConnectionData[clientUpdateMsg.m_iPlayerArrayIdx].m_uiLastMessageIdxProcessed)
                {
                    m_accdClientConnectionData[clientUpdateMsg.m_iPlayerArrayIdx].m_uiLastMessageIdxProcessed = clientUpdateMsg.m_uiMessageIdx;
                }

                // step the physics scene (once per message, which advances every rigidbody in the scene)
                m_serverPhysicsScene.Simulate(Time.fixedDeltaTime);
            } // valid player slot filled
        } // message loop

        // now go through and remove messages that are processed
        for (int idx = t_currMessageLen - 1; idx >= 0; idx--)
        {
            m_ClientUpdateMessages.RemoveAt(idx);
        }

        // update and send our all player update to all clients
        SendAllClientsUpdate();
    } // if player count > 0 && msg_count > 0
} // Process Player Update

In researching others who have encountered this kind of thing, I came across this great post, specifically this snippet of a reply from the author to a comment:

"You cannot do clientside prediction with rigidbodies, since you can't manually step a rigidbody."

I think that post was written before physics scenes were available in Unity, but physics scenes don't completely solve the issue. You can step a single rigidbody in theory, but it might require a separate physics scene for each one, and according to the post referenced above that can bog things down. Plus, if your game has interactions with other players or static game objects, that adds additional overhead.

Instead of manually stepping an individual rigidbody, the idea here is to *prevent* an individual rigidbody from being updated during a Physics.Simulate call. “Locking” a player who has no input during the server step would in theory bring the 20 player position curve above towards the single player line, assuming that the physics step is now outside the input message loop.

The player script has a new method to implement this locking and unlocking:

//Language: C#
// player class
// ...
public Vector3 m_v3PreLockPosition;
public Vector3 m_v3PreLockVelocity;
public bool m_bIsLocked;

// Lock
// enable or disable the player from being affected by physics updates
public void Lock(bool _lockMe)
{
    // make sure we aren't locking to the same thing again
    if ((_lockMe && m_bIsLocked) || (!_lockMe && !m_bIsLocked))
        return;

    // store or restore player velocity & position
    if (_lockMe)
    {
        // Cache rigidbody velocity and position
        m_v3PreLockVelocity = m_rb.velocity;
        m_v3PreLockPosition = transform.position;
        // mark locked
        m_bIsLocked = true;
    }
    else
    {
        // Restore rigidbody velocity & position from cache
        m_rb.position = m_v3PreLockPosition;
        m_rb.velocity = m_v3PreLockVelocity;
        // mark unlocked
        m_bIsLocked = false;
    }
}
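To see what the cache-and-restore approach does to a player who skips some ticks, here is a toy one-dimensional version of the same idea. It is a sketch under assumptions rather than the game's code: `Body` and `server_tick` are hypothetical, `DT` is an assumed timestep, and the "physics step" is just position plus velocity times `DT`.

```python
DT = 0.02  # assumed fixed timestep, in seconds


class Body:
    """Toy stand-in for a player rigidbody with the lock/unlock caching idea."""

    def __init__(self):
        self.pos = 0.0
        self.vel = 0.0
        self.locked = False
        self._cached = (0.0, 0.0)  # (position, velocity) at lock time

    def lock(self, lock_me):
        if lock_me == self.locked:
            return  # already in the requested state
        if lock_me:
            self._cached = (self.pos, self.vel)  # cache state when locking
        else:
            self.pos, self.vel = self._cached    # restore state when unlocking
        self.locked = lock_me


def server_tick(bodies, inputs):
    """One server update; `inputs` maps body index -> commanded velocity."""
    for idx, velocity in inputs.items():
        bodies[idx].lock(False)  # undo anything that happened while locked
        bodies[idx].vel = velocity
    for body in bodies:          # a single physics step moves every body
        body.pos += body.vel * DT
    for body in bodies:          # re-lock whoever was unlocked this tick
        if not body.locked:
            body.lock(True)


bodies = [Body(), Body()]
server_tick(bodies, {0: 5.0, 1: 5.0})  # tick 1: both players send input
for _ in range(9):
    server_tick(bodies, {0: 5.0})      # ticks 2-10: only player 0 sends input
server_tick(bodies, {0: 5.0, 1: 5.0})  # tick 11: player 1 sends input again
print(bodies[0].pos, bodies[1].pos)
```

Player 0 ends near 1.1 (eleven steps) and player 1 near 0.2 (two steps), so each player advances only on ticks where it had input. Note that in this sketch a locked body still drifts during the physics step and is only snapped back when it is unlocked, which is what caching on lock and restoring on unlock amounts to.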

Two new test cases are added, with a new Boolean flag "SERVER_STEP_INSIDE_INPUTS_LOOP":

//Language: C#
// set our various flags to a given test condition
public static void SET_TEST_CONDITIONS(int _TestNo)
{
    switch (_TestNo)
    {
        case 0:
            ARTIFICIAL_LAG_SEC = 0.1f;
            SERVER_NUM_BOTS = 0;
            SERVER_STEP_INSIDE_INPUTS_LOOP = true;
            Characterization.TEST_FORWARD_RUN = false;
            Characterization.TEST_FORWARD = true;
            Characterization.TEST_ROTATE = false;
            break;
        case 1:
            SET_TEST_CONDITIONS(0);
            SERVER_NUM_BOTS = 19;
            break;
        case 2:
            SET_TEST_CONDITIONS(0);
            SERVER_STEP_INSIDE_INPUTS_LOOP = false;
            break;
        case 3:
            SET_TEST_CONDITIONS(0);
            SERVER_NUM_BOTS = 19;
            SERVER_STEP_INSIDE_INPUTS_LOOP = false;
            break;
    }
}

The server method to process all pending player input messages gets updated to incorporate the new test switch and locking strategy, like this:

//Language: C#
public void ProcessPlayerUpdates()
{
    // if we have players and input messages, process them
    if (m_iPlayerCount > 0 && m_ClientUpdateMessages.Count > 0)
    {
        // loop through our pending messages and update the players based on each
        int t_currMessageLen = m_ClientUpdateMessages.Count;

        // ------------------------------
        // Player input message loop
        for (int idx = 0; idx < t_currMessageLen; idx++)
        {
            // Retrieve the client player to server update embedded in the client to server update
            Client2ServerPlayerUpdate_t clientUpdateMsg = new Client2ServerPlayerUpdate_t();
            clientUpdateMsg.FromByteArray(m_ClientUpdateMessages[idx].m_bClientUpdateMsg);

            // make sure it's a valid player slot
            if (m_PlayerSlotsFilled[clientUpdateMsg.m_iPlayerArrayIdx])
            {
                // unlock the player if !SERVER_STEP_INSIDE_INPUTS_LOOP
                if (!SERVER_STEP_INSIDE_INPUTS_LOOP)
                {
                    m_Players[clientUpdateMsg.m_iPlayerArrayIdx].Lock(false);
                }

                // call the player update from message
                m_Players[clientUpdateMsg.m_iPlayerArrayIdx].UpdatePlayerFromClient2ServerUpdate(clientUpdateMsg, m_eGameState);

                // Update our most recent message index processed
                if (clientUpdateMsg.m_uiMessageIdx > m_accdClientConnectionData[clientUpdateMsg.m_iPlayerArrayIdx].m_uiLastMessageIdxProcessed)
                {
                    m_accdClientConnectionData[clientUpdateMsg.m_iPlayerArrayIdx].m_uiLastMessageIdxProcessed = clientUpdateMsg.m_uiMessageIdx;
                }

                // step the physics scene if SERVER_STEP_INSIDE_INPUTS_LOOP
                if (SERVER_STEP_INSIDE_INPUTS_LOOP)
                {
                    m_serverPhysicsScene.Simulate(Time.fixedDeltaTime);
                }
            } // valid player slot filled
        } // message loop

        // Physics step outside if !SERVER_STEP_INSIDE_INPUTS_LOOP
        if (!SERVER_STEP_INSIDE_INPUTS_LOOP)
        {
            // step the physics scene outside the input loop
            m_serverPhysicsScene.Simulate(Time.fixedDeltaTime);

            // re-lock all players who were unlocked, optimize this
            for (int i = 0; i < m_Players.Length; i++)
                if (!m_Players[i].IsLocked())
                    m_Players[i].Lock(true);
        }

        // now go through and remove messages that are processed
        for (int idx = t_currMessageLen - 1; idx >= 0; idx--)
        {
            m_ClientUpdateMessages.RemoveAt(idx);
        }

        // update and send our all player update to all clients
        SendAllClientsUpdate();
    } // if player count > 0 && msg_count > 0
} // Process Player Update

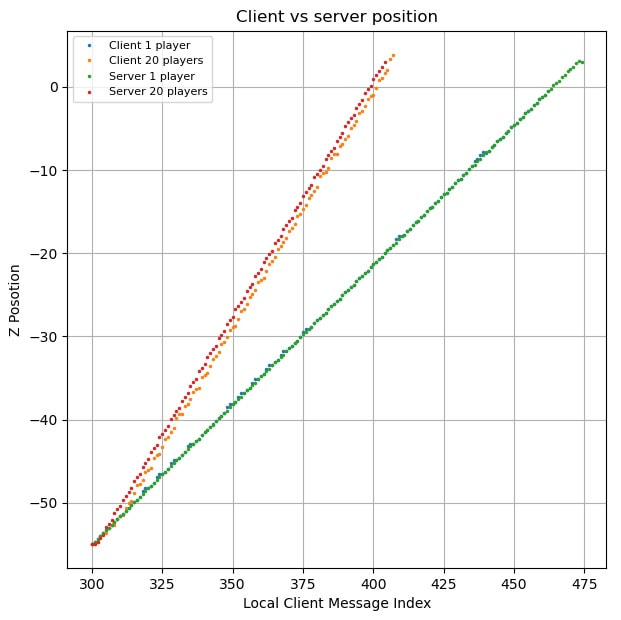

After running the new test cases 10 times each to gather errors, here is the result:

And here is the graph of server vs client position:

As the data shows, locking players combined with running the server step *outside* the input loop tracks the "ground truth" (one player with the server step *inside* the input loop) more closely. Combined with its lower prediction error, this is the setup I'm going with for multiplayer in my game.

Up next is characterizing rotation combined with moving forward, to simulate actual gameplay a bit more.